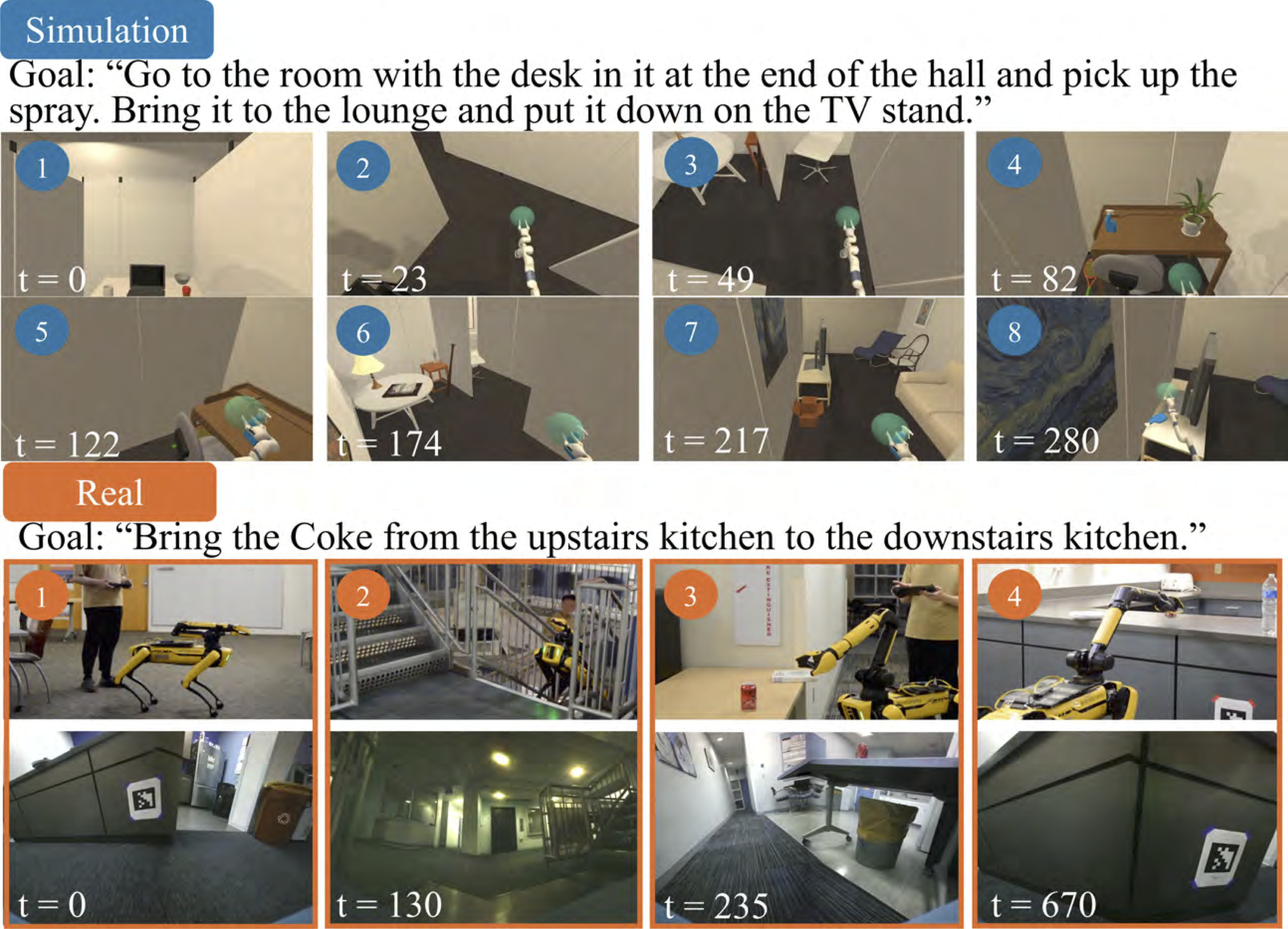

Trajectories

Examples of the Data's Schema

Simulation - structure of each of the 524 simulated trajectories (one entry in "steps" per time step)

{

"nl_command": "Find the pepper and put it on top of the green chair with a blue pillow on it.",

"scene": "FloorPlan_Train8_1",

"steps": [

{

"sim_time": 0.19645099341869354,

"wall-clock_time": "15:49:37.334",

"action": "Initialize",

"state_body": [ # robot pose

3.0,

0.9009992480278015,

-4.5,

269.9995422363281

],

"state_ee": [ # end-effector pose

2.5999975204467773,

0.8979992270469666,

-4.171003341674805,

-1.9440563492718068e-07,

-1.2731799533306385,

1.9440386333307377e-07

],

"hand_sphere_radius": 0.05999999865889549,

"held_objs": [],

"held_objs_state": {},

"inst_det2D": {

"keys": [ # identified instances in the environment

"Wall_4|0.98|1.298|-2.63",

"Wall_3|5.43|1.298|-5.218",

"RemoteControl|+01.15|+00.48|-04.24",

...],

"values": [ # bounding box coordinates of each instance

[418, 43, 1139, 220],

[315, 0, 417, 113],

[728, 715, 760, 719],

...]

},

"rgb": "./rgb_0.npy", # path of visual data for this timestep

"depth": "./depth_0.npy",

"inst_seg": "./inst_seg_0.npy"

}

]

}
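The sketch below shows how one might walk the "steps" list of a simulation trajectory and pair the parallel "keys" and "values" lists of "inst_det2D". It rests on several assumptions that the schema above does not confirm. The files on disk are assumed to be plain JSON, without the explanatory "#" annotations shown on this page. Instance keys are assumed to follow the AI2-THOR-style "Name|x|y|z" pattern seen in the examples. Bounding boxes are assumed to be [x_min, y_min, x_max, y_max] in pixels. The helper names (parse_instance_key, box_area, detections_by_instance, summarize_steps) and the sample record are hypothetical.

```python
import json
from typing import Any

# Hypothetical sample mirroring the schema above, written as plain JSON
# (the "#" annotations on this page are not valid JSON). Fields not needed
# by the helpers below are omitted.
EXAMPLE_TRAJECTORY = json.loads("""
{
  "nl_command": "Find the pepper and put it on top of the green chair with a blue pillow on it.",
  "scene": "FloorPlan_Train8_1",
  "steps": [
    {
      "sim_time": 0.19645099341869354,
      "action": "Initialize",
      "held_objs": [],
      "inst_det2D": {
        "keys": ["Wall_4|0.98|1.298|-2.63", "RemoteControl|+01.15|+00.48|-04.24"],
        "values": [[418, 43, 1139, 220], [728, 715, 760, 719]]
      }
    }
  ]
}
""")


def parse_instance_key(key: str) -> dict[str, Any]:
    """Split an instance key of the ASSUMED form "Name|x|y|z"."""
    name, *coords = key.split("|")
    return {"name": name, "position": tuple(float(c) for c in coords)}


def box_area(box: list[int]) -> int:
    """Box area, ASSUMING the layout [x_min, y_min, x_max, y_max] in pixels."""
    x_min, y_min, x_max, y_max = box
    return max(0, x_max - x_min) * max(0, y_max - y_min)


def detections_by_instance(step: dict[str, Any]) -> dict[str, list[int]]:
    """Pair the parallel "keys" and "values" lists of inst_det2D into one dict."""
    det = step["inst_det2D"]
    if len(det["keys"]) != len(det["values"]):
        raise ValueError("inst_det2D 'keys' and 'values' differ in length")
    return dict(zip(det["keys"], det["values"]))


def summarize_steps(trajectory: dict[str, Any]) -> list[dict[str, Any]]:
    """One row per time step: sim time, action, visible instance names, holding flag."""
    rows = []
    for step in trajectory["steps"]:
        boxes = detections_by_instance(step)
        rows.append({
            "sim_time": step["sim_time"],
            "action": step["action"],
            "visible": sorted(parse_instance_key(k)["name"] for k in boxes),
            "holding": bool(step["held_objs"]),
        })
    return rows


for row in summarize_steps(EXAMPLE_TRAJECTORY):
    print(row["action"], row["visible"], row["holding"])
```

Keeping "keys" and "values" as two parallel lists (rather than one mapping) matches the schema as shown. The length check catches files where the two lists have drifted out of sync.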

Real - record stored at every time step of the 50 real-world trajectories

{

"language_command": "Take the cup from the table in the dining area which is closest to the stairs and bring it to the table near the couches in the corner of the big dining room besides the windows.",

"scene_name": "upstairs",

"wall_clock_time": "05:29:40.117",

"left_fisheye_rgb": "./Trajectories/trajectories/data_33/folder_0.zip/left_fisheye_image_0.npy", # path of visual data for this timestep

"left_fisheye_depth": "./Trajectories/trajectories/data_33/folder_0.zip/left_fisheye_depth_0.npy",

"right_fisheye_rgb": "./Trajectories/trajectories/data_33/folder_0.zip/right_fisheye_image_0.npy",

"right_fisheye_depth": "./Trajectories/trajectories/data_33/folder_0.zip/right_fisheye_depth_0.npy",

"gripper_rgb": "./Trajectories/trajectories/data_33/folder_0.zip/gripper_image_0.npy",

"gripper_depth": "./Trajectories/trajectories/data_33/folder_0.zip/gripper_depth_0.npy",

"left_fisheye_instance_seg": "./Trajectories/trajectories/data_33/folder_0.zip/left_fisheye_image_instance_seg_0.npy",

"right_fisheye_instance_seg": "./Trajectories/trajectories/data_33/folder_0.zip/right_fisheye_image_instance_seg_0.npy",

"gripper_fisheye_instance_seg": "./Trajectories/trajectories/data_33/folder_0.zip/gripper_image_instance_seg_0.npy",

"body_state": {"x": 1.3496176111016192, "y": 0.005613277629761049, "z": 0.15747965011090911},

"body_quaternion": {"w": 0.04275326839680784, "x": -0.0008884984706659231, "y": -0.00030123853590331847, "z": 0.999085220522855},

"body_orientation": {"r": -0.003024387647253151, "p": 0.017297610440263775, "y": 3.05395206999625},

"body_linear_velocity": {"x": 0.00015309476140765987, "y": 0.001022209848280799, "z": 0.0001717336942742603},

"body_angular_velocity": {"x": 4.532841128101956e-05, "y": 0.003003578426140623, "z": -0.0046712267592016726},

"arm_state_rel_body": {"x": 0.5535466074943542, "y": -0.00041040460928343236, "z": 0.2611726224422455},

"arm_quaternion_rel_body": {"w": 0.9999685287475586, "x": -0.0011630485532805324, "y": 0.007775876671075821, "z": 0.007775876671075821},

"arm_orientation_rel_body": {"x": -0.0023426745301198485, "y": 0.015549442728134426, "z": -0.0021046873064696214},

"arm_state_global": {"x": 0.7976601233169699, "y": -0.00041040460928343236, "z": 0.2611726224422455},

"arm_quaternion_global": {"w": 0.043804580215426665, "x": -0.008706641097541701, "y": -0.0011317045101892497, "z": 0.9990015291187636},

"arm_orientation_global": {"x": -0.003024387647253151, "y": 0.017297610440263775, "z": 3.05395206999625},

"arm_linear_velocity": {"x": 0.002919594927038712, "y": 0.004658882987521996, "z": 0.012878690074243797},

"arm_angular_velocity": {"x": -0.01867944403436315, "y": 0.02911512882983833, "z": -0.008279345145765714},

"arm_stowed": 1, # Boolean

"gripper_open_percentage": 0.39261579513549805,

"object_held": 0, # Boolean

"feet_state_rel_body": [

{"x": 0.3215886056423187, "y": 0.17115488648414612, "z": -0.5142754912376404},

{"x": 0.32302412390708923, "y": -0.17028175294399261, "z": -0.5178792476654053},

{"x": -0.27173668146133423, "y": 0.16949543356895447, "z": -0.5153297185897827},

{"x": -0.2700275778770447, "y": -0.1685962975025177, "z": -0.5157276391983032}],

"feet_state_global": [

{"x": -0.3341075772867149, "y": -0.14278573670828154, "z": -0.5149532673227382},

{"x": -0.3063631798978494, "y": 0.19752718640765313, "z": -0.518328069669068},

{"x": 0.25719142551156154, "y": -0.19181889447285838, "z": -0.5149682779363334},

{"x": 0.2843717159282008, "y": 0.1451830347804529, "z": -0.5151399962832868}],

"all_joint_angles": {

"fl.hx": 0.010119102895259857,

"fl.hy": 0.7966763973236084,

"fl.kn": -1.576759934425354, ...},

"all_joint_velocities": {

"fl.hx": -0.00440612155944109,

"fl.hy": -0.004167056642472744,

"fl.kn": -0.007508249022066593, ...}

}
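The visual-data paths in the real-world records point inside an archive, as in ".../data_33/folder_0.zip/left_fisheye_image_0.npy". This is a minimal sketch for reading such a file. It assumes that everything up to ".zip" is a zip file on disk and that the remainder names a member inside it. It also assumes that the member may sit under a directory prefix inside the archive, in which case it is matched by file name. The function names split_zip_path and read_zipped_file are hypothetical.

```python
import zipfile
from pathlib import PurePosixPath


def split_zip_path(path: str) -> tuple[str, str]:
    """Split "<archive>.zip/<member>" into (archive path, member name).

    ASSUMPTION: the first ".zip/" in the path separates the archive on disk
    from the file stored inside it.
    """
    marker = ".zip/"
    idx = path.find(marker)
    if idx == -1:
        raise ValueError(f"no '.zip/' component in {path!r}")
    return path[: idx + len(".zip")], path[idx + len(marker):]


def read_zipped_file(path: str) -> bytes:
    """Return the raw bytes of a file referenced as "<archive>.zip/<member>"."""
    archive, member = split_zip_path(path)
    with zipfile.ZipFile(archive) as zf:
        names = zf.namelist()
        if member not in names:
            # ASSUMPTION: fall back to a unique file-name match in case the
            # archive stores members under a directory prefix.
            matches = [n for n in names if PurePosixPath(n).name == member]
            if len(matches) != 1:
                raise KeyError(f"{member!r} not found uniquely in {archive!r}")
            member = matches[0]
        return zf.read(member)
```

Because each entry is a .npy file, the returned bytes can then be decoded with NumPy, for example numpy.load(io.BytesIO(read_zipped_file(step["gripper_depth"]))). The sketch itself sticks to the standard library so that path handling stays separate from array decoding.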

Metadata

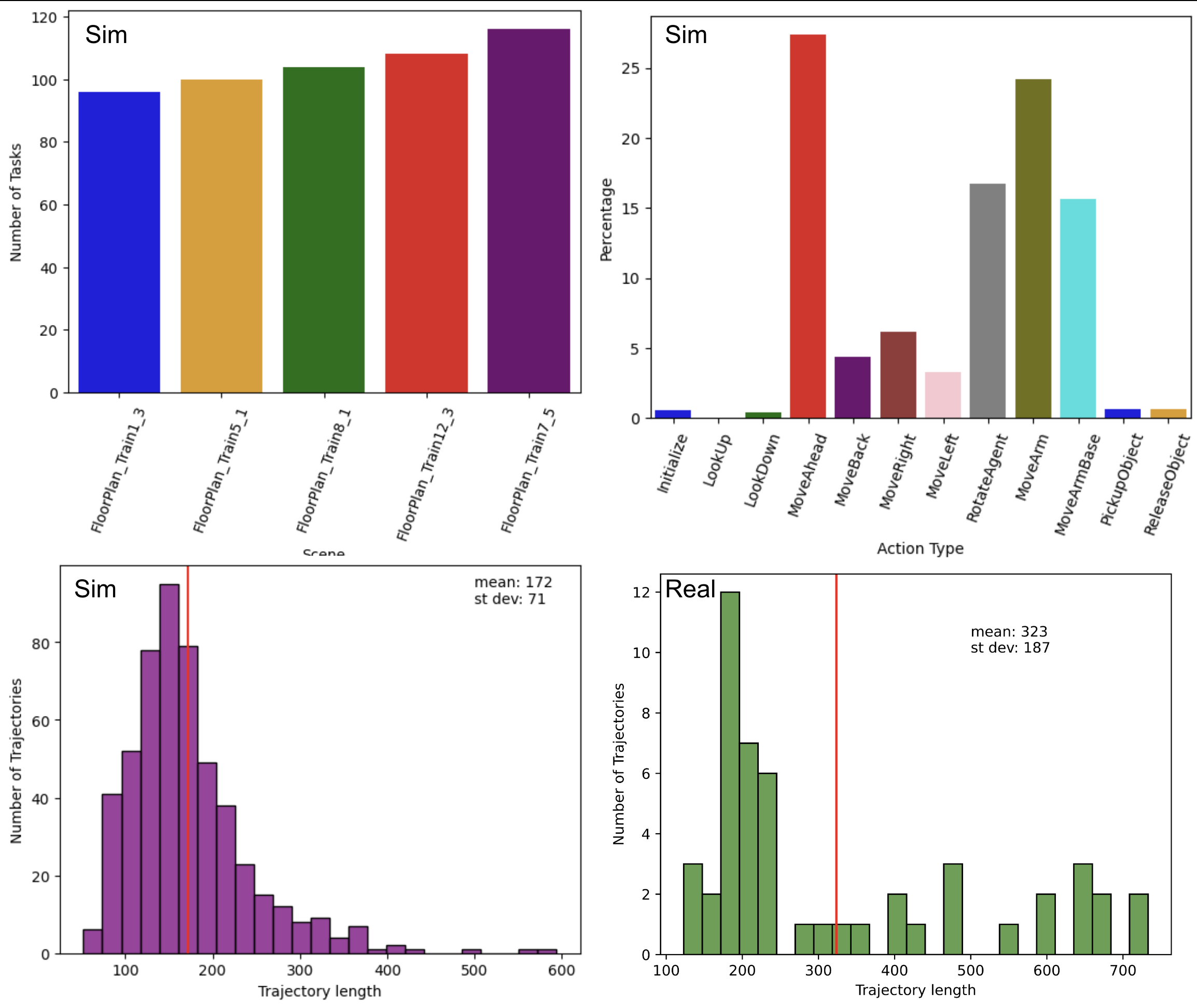

Environment counts, action distribution, and trajectory lengths.
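The following is a hedged sketch of how comparable counts could be tallied from the simulation records. It uses only the "scene", "steps", and "action" fields shown in the schema above. It is not necessarily how the figure was produced. Trajectory length is taken here to mean the number of entries in "steps", which is an assumption. The function name dataset_stats is hypothetical.

```python
from collections import Counter
from statistics import mean
from typing import Any, Iterable


def dataset_stats(trajectories: Iterable[dict[str, Any]]) -> dict[str, Any]:
    """Tally scenes, per-step actions, and step counts over simulation trajectories."""
    scene_counts: Counter = Counter()
    action_counts: Counter = Counter()
    lengths: list[int] = []
    for traj in trajectories:
        scene_counts[traj["scene"]] += 1
        steps = traj["steps"]
        lengths.append(len(steps))  # ASSUMPTION: length = number of recorded steps
        action_counts.update(step["action"] for step in steps)
    return {
        "scene_counts": scene_counts,
        "action_counts": action_counts,
        "trajectory_lengths": lengths,
        "mean_length": mean(lengths) if lengths else 0.0,
    }
```

Counter objects are used so that the action distribution can be passed straight to a plotting routine, or inspected with most_common().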

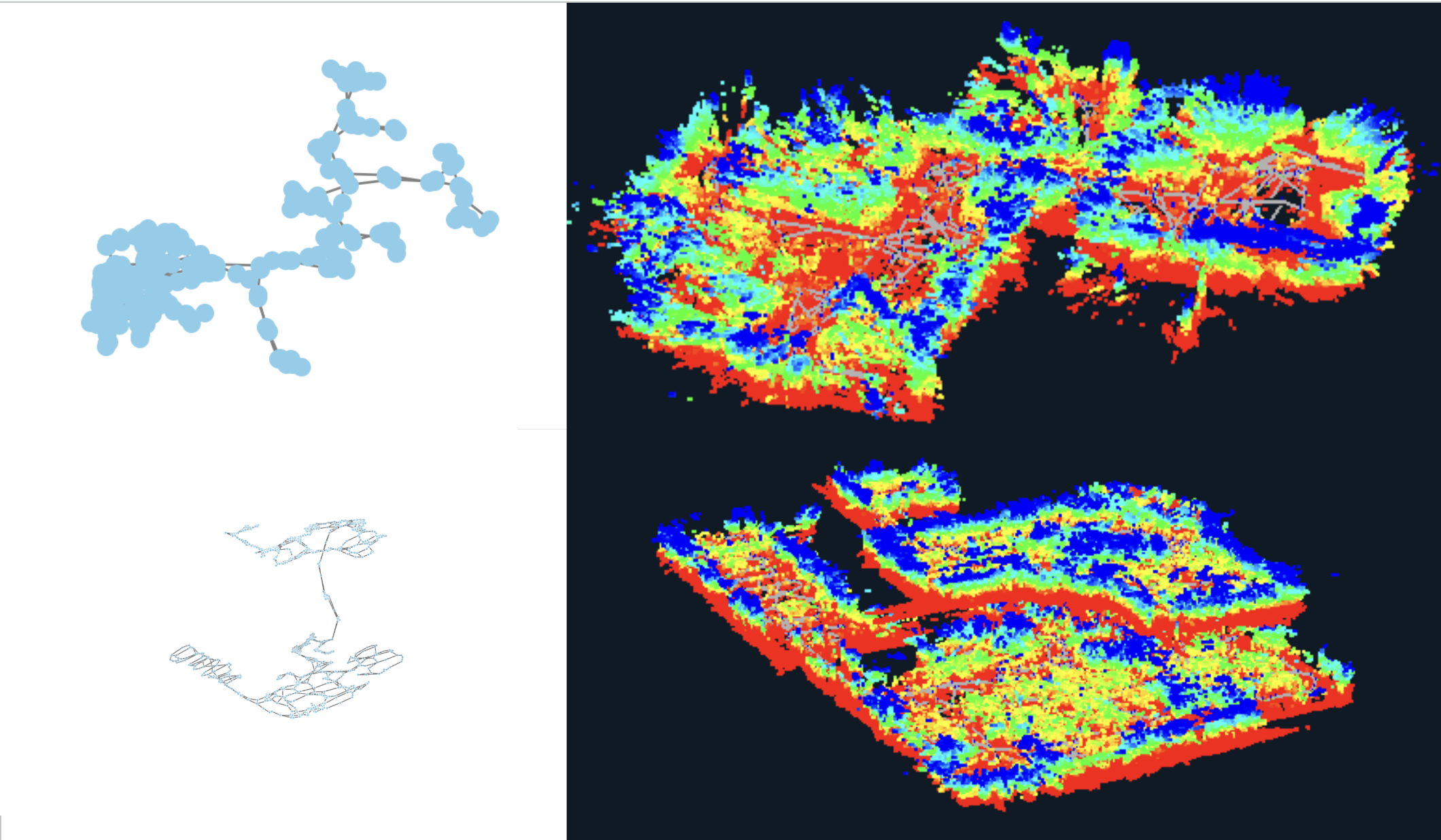

Real-World Maps

Maps of the environments, built by the robot and used for data collection. The left column shows a 2D visualization and the right column a 3D point cloud of each environment. The top row depicts the laboratory and the bottom row depicts the multi-floor university building.

Acknowledgements

This work is supported by ONR under grant award numbers N00014-22-1-2592 and N00014-23-1-2794, by NSF under grant award number CNS-2150184, and by Amazon Robotics. We also thank Aryan Singh, George Chemmala, Ziyi Yang, David Paulius, Ivy He, Lakshita Dodeja, Mingxi Jia, Benned Hedegaard, Thao Nguyen, Selena Williams, Benedict Quartey, Tuluhan Akbulut, and George Konidaris for their help in various phases of the work.

BibTeX

Coming Soon!